Introduction

Road safety remains a critical global concern, with driver fatigue and distraction contributing significantly to traffic accidents. The Driver Monitoring System (DMS) addresses these challenges through continuous, AI-driven monitoring of the driver’s state. This demonstration illustrates how the DMS uses camera-based AI to authenticate drivers, detect signs of drowsiness or inattention, and issue timely alerts, thereby reducing the risk of collisions and improving overall safety.

System Overview

The DMS combines sophisticated hardware and software components to deliver a comprehensive monitoring solution. Its key features include:

Facial Recognition: Authenticates the driver’s identity to ensure authorized vehicle access.

Drowsiness Detection: Employs a custom AI algorithm that analyses the Eye Aspect Ratio (EAR) and facial expressions to identify signs of sleepiness.

Driver Focus Detection: Tracks eye and head movements to determine if the driver is distracted or looking away from the road.

Fatigue Detection: Recognizes excessive yawning as an indicator of driver fatigue.

Real-Time Alerts: Delivers audible and visual warnings when unsafe behaviours are detected.

Data Logging: Records behavioural data for post-event analysis and reporting.

Haptic Feedback: Activates vibration on the steering wheel to alert the driver during critical situations.

These functionalities work together seamlessly to provide a robust safety net for drivers and passengers alike.

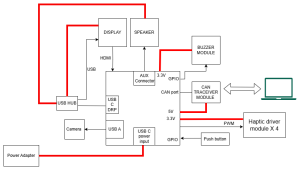

Hardware Setup

- Infrared (IR) camera or standard webcam for driver monitoring.

- Processor: AM6254 with four Arm® Cortex®-A53 cores, a 3D graphics processing unit (GPU), one Arm Cortex-M4F core, and two PRU-SS units.

- AI-enabled computing unit (e.g., an Edge AI device or a laptop with GPU support).

- Speaker for audible alerts.

- Display screen for real-time visualization of system outputs.

- Haptic vibrators integrated into the steering wheel for tactile feedback.

Figure 1: DMS block diagram

The DMS software suite includes:

- AI-powered facial recognition and eye-tracking algorithms.

- A custom graphical user interface (GUI) for intuitive operation.

- Machine learning models trained on extensive driver behaviour datasets.

- A real-time image processing framework for rapid analysis.

- An alert management system to coordinate warnings.

- A driver registration application for user onboarding.

Operation

1. System Initialization

The DMS is powered on, and the camera is calibrated to ensure optimal visibility of the driver’s face under varying lighting conditions.

2. Driver Authentication

The system uses facial recognition to identify and authenticate the driver.

Upon successful authentication, the engine ignition switch is enabled, allowing the vehicle to start.
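The authentication step can be sketched as a nearest-match lookup over enrolled drivers. This is a minimal illustration, not the DMS implementation: it assumes a face recognition model (not shown) has already turned each face into a fixed-length embedding vector, and the names `cosine_similarity`, `authenticate`, and the 0.8 threshold are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def authenticate(probe, enrolled, threshold=0.8):
    """Return the ID of the best-matching enrolled driver, or None if no match.

    probe:     embedding of the face currently in front of the camera (assumed input)
    enrolled:  dict mapping driver ID -> embedding captured at registration
    threshold: illustrative similarity cut-off, not a value from the DMS
    """
    best_id, best_score = None, threshold
    for driver_id, reference in enrolled.items():
        score = cosine_similarity(probe, reference)
        if score >= best_score:
            best_id, best_score = driver_id, score
    return best_id

# Toy 3-dimensional embeddings; real models produce far longer vectors.
enrolled_drivers = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
ignition_enabled = authenticate([0.9, 0.1, 0.0], enrolled_drivers) is not None
```

Returning `None` for an unknown face keeps the decision to enable the ignition switch explicit in the calling code.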

3. Normal Driving Mode

The system monitors the driver during typical operation.

Continuous analysis of eye movements, head position, and facial expressions ensures real-time assessment of the driver’s state.

4. Drowsiness Detection Test

The demonstrator simulates drowsiness by blinking slowly or closing their eyes for an extended period.

The system detects these behaviours and triggers an immediate audio-visual alert to re-engage the driver.
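The drowsiness check can be illustrated with the commonly used six-landmark definition of the Eye Aspect Ratio, EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|), combined with a counter of consecutive low-EAR frames. The sketch below is hedged: the DMS uses a custom algorithm whose details are not given here, landmark extraction is assumed to happen upstream, and the threshold (0.21) and frame count (15) are illustrative values only.

```python
import math

def eye_aspect_ratio(eye):
    """EAR for six (x, y) eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and
    p6, p5 on the lower lid directly beneath them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

class DrowsinessDetector:
    """Flags drowsiness when EAR stays below a threshold for N consecutive frames."""

    def __init__(self, ear_threshold=0.21, min_closed_frames=15):
        self.ear_threshold = ear_threshold          # assumed value, tune per camera
        self.min_closed_frames = min_closed_frames  # assumed value, depends on frame rate
        self.closed_frames = 0

    def update(self, ear):
        """Feed one frame's EAR; return True while the eyes-closed run is long enough."""
        if ear < self.ear_threshold:
            self.closed_frames += 1
        else:
            self.closed_frames = 0  # a normal blink resets the run
        return self.closed_frames >= self.min_closed_frames
```

Requiring a run of frames rather than a single low reading is what separates an ordinary blink from the slow blinking or extended eye closure simulated in this test.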

5. Distraction Detection Test

The demonstrator intentionally looks away from the road for a prolonged duration.

The DMS identifies this inattentiveness and issues a warning to refocus the driver’s attention.
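One simple way to express "looking away for a prolonged duration" is a timer that starts when the head pose leaves an on-road cone and resets when it returns. The sketch below assumes a head-pose estimator (not shown) supplies yaw and pitch in degrees; the class name `DistractionDetector` and the angle and time limits are hypothetical choices, not DMS parameters.

```python
class DistractionDetector:
    """Flags distraction when the head stays outside an on-road cone for too long."""

    def __init__(self, max_yaw_deg=30.0, max_pitch_deg=20.0, max_off_road_s=2.0):
        self.max_yaw_deg = max_yaw_deg        # assumed horizontal limit
        self.max_pitch_deg = max_pitch_deg    # assumed vertical limit
        self.max_off_road_s = max_off_road_s  # assumed tolerated glance duration
        self.off_road_since = None            # timestamp when the driver looked away

    def update(self, yaw_deg, pitch_deg, timestamp_s):
        """Feed one head-pose sample; return True once the glance away is too long."""
        off_road = abs(yaw_deg) > self.max_yaw_deg or abs(pitch_deg) > self.max_pitch_deg
        if not off_road:
            self.off_road_since = None  # eyes back on the road: reset the timer
            return False
        if self.off_road_since is None:
            self.off_road_since = timestamp_s
        return timestamp_s - self.off_road_since >= self.max_off_road_s
```

Using timestamps instead of frame counts keeps the behaviour independent of the camera frame rate, so short mirror checks do not trigger a warning.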

6. Yawning Detection Test

The demonstrator exaggerates yawning movements to simulate fatigue.

The system recognizes this pattern and provides appropriate feedback via alerts.
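Excessive yawning can be approximated by counting wide mouth openings inside a sliding time window. The following sketch is an assumption about how such a check might look, not the DMS algorithm: it uses a mouth aspect ratio (opening height divided by mouth width) computed from four hypothetical landmarks, and the threshold, window length, and yawn limit are illustrative.

```python
import math
from collections import deque

def mouth_aspect_ratio(top, bottom, left, right):
    """Mouth opening height divided by mouth width, from four (x, y) landmarks."""
    return math.dist(top, bottom) / math.dist(left, right)

class YawnCounter:
    """Flags fatigue when too many yawns occur within a sliding time window."""

    def __init__(self, mar_threshold=0.6, window_s=60.0, max_yawns=3):
        self.mar_threshold = mar_threshold  # assumed: mouth counts as wide open above this
        self.window_s = window_s            # assumed window length in seconds
        self.max_yawns = max_yawns          # assumed yawn count that signals fatigue
        self.mouth_open = False
        self.yawn_times = deque()

    def update(self, mar, timestamp_s):
        """Feed one frame's ratio; return True while the yawn count is at the limit."""
        is_open = mar > self.mar_threshold
        if is_open and not self.mouth_open:
            self.yawn_times.append(timestamp_s)  # rising edge marks a new yawn
        self.mouth_open = is_open
        # Drop yawns that have fallen out of the window.
        while self.yawn_times and timestamp_s - self.yawn_times[0] > self.window_s:
            self.yawn_times.popleft()
        return len(self.yawn_times) >= self.max_yawns
```

Counting only the rising edge means one long yawn is registered once, however many frames it spans.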

7. Alert System Demonstration

Detected behaviours are logged and displayed on the screen for review.

Alerts and notifications are analysed to demonstrate the system’s responsiveness and accuracy.
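Logging for later review can be as simple as appending structured records and exporting them to CSV. The record layout below (`DriverEvent` and its fields) is hypothetical, chosen only to illustrate the idea; the actual DMS log format is not specified in this document.

```python
import csv
import io
from collections import Counter
from dataclasses import asdict, dataclass

@dataclass
class DriverEvent:
    """One detected behaviour (hypothetical record layout)."""
    timestamp_s: float  # seconds since the start of the drive
    driver_id: str
    kind: str           # e.g. "drowsiness", "distraction", "yawning"
    value: float        # the measurement that triggered the event

FIELDS = ["timestamp_s", "driver_id", "kind", "value"]

def write_events(events, stream):
    """Write events as CSV with a header row to any text stream."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    for event in events:
        writer.writerow(asdict(event))

def summarize(events):
    """Count events per behaviour kind for an on-screen summary."""
    return Counter(event.kind for event in events)

events = [
    DriverEvent(12.5, "alice", "drowsiness", 0.18),
    DriverEvent(47.0, "alice", "distraction", 42.0),
    DriverEvent(63.2, "alice", "drowsiness", 0.17),
]
buffer = io.StringIO()  # stands in for a log file in this sketch
write_events(events, buffer)
counts = summarize(events)
```

Writing to a generic text stream lets the same function target a file on the device or an in-memory buffer for display.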

Expected Outcomes

The demonstration is designed to achieve the following results:

Real-Time Detection: Immediate identification and alerting for drowsiness, distraction, and fatigue.

Accurate Authentication: Reliable driver identification through facial recognition.

Effective Feedback: Clear, actionable audio, visual, and haptic alerts to mitigate unsafe behaviours.

Data Insights: Comprehensive logging of driver behaviour for post-event analysis and system refinement.

Technical Approach & Innovations

AI-Powered Image Processing

The proprietary AI models analyse real-time video feeds, extracting key facial landmarks and patterns to determine the driver’s state.

Edge Computing for Real-Time Performance

To reduce latency and ensure a fast response, the DMS uses edge AI processing on embedded computing devices, minimizing dependency on cloud-based computation.

Sensor Fusion Technology

By combining infrared (IR) cameras, accelerometers, and other in-vehicle sensors, the system enhances detection accuracy under varying conditions such as low light and driver position changes.

Adaptive Machine Learning Algorithms

The models continuously improve through data collection and training, ensuring enhanced accuracy over time across different demographics and driving conditions.

Development Challenges and Solutions

Lighting and Environmental Variations

Challenge: Variability in lighting (day/night driving, sun glare) affected accuracy.

Solution: Implemented IR cameras and adaptive brightness algorithms to ensure consistent monitoring.
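As one illustration of what an adaptive brightness step could look like, the sketch below applies gamma correction so that a frame's mean intensity is mapped to a target level. This is an assumed technique for illustration only; the document does not state which algorithm the DMS uses, and `adaptive_gamma` and its default target of 128 are hypothetical.

```python
import math

def adaptive_gamma(pixels, target_mean=128.0):
    """Gamma-correct 8-bit grayscale pixels so the mean intensity maps to target_mean.

    pixels is a flat list of integers in 0..255. The gamma value is chosen so
    that a pixel at the current mean intensity lands exactly on target_mean;
    darker and brighter pixels are shifted along the same curve.
    """
    if not pixels:
        return []
    mean = sum(pixels) / len(pixels)
    if mean <= 0 or mean >= 255:
        return list(pixels)  # fully black or fully white: gamma cannot help
    gamma = math.log(target_mean / 255.0) / math.log(mean / 255.0)
    # Precompute the mapping once for all 256 possible input values.
    lut = [round(255.0 * (v / 255.0) ** gamma) for v in range(256)]
    return [lut[v] for v in pixels]

# A dark night-time frame is lifted; ordering of intensities is preserved.
brightened = adaptive_gamma([20, 40, 60])
```

A lookup table keeps the per-pixel cost to a single index operation, which matters when the correction runs on every frame.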

False Positive Reduction

Challenge: Initial models produced false alarms due to normal facial movements.

Solution: Fine-tuned AI models using diverse datasets to distinguish between natural driver actions and true risk indicators.

Processing Latency

Challenge: Real-time monitoring required fast data processing without internet dependency.

Solution: Integrated edge AI computing to process data on-device with minimal delay.

Driver Variability (Facial Features, Headgear, Glasses)

Challenge: Diverse driver appearances affected recognition accuracy.

Solution: Expanded training datasets with diverse driver profiles and introduced adaptive model tuning.

Benefits and Applications

The DMS offers significant advantages for both individual drivers and fleet operators:

Enhanced Safety: Reduces the likelihood of accidents caused by human error.

Scalability: Can be integrated into a wide range of vehicles, from personal cars to commercial fleets, helping fleet operators reduce accident rates.

Driver Training: Provides actionable data to improve driver habits over time.

Autonomous Vehicle Integration: Complements self-driving technologies by ensuring driver readiness.

Conclusion

The Driver Monitoring System stands as a pivotal advancement in automotive safety technology. This demonstration underscores its ability to detect and respond to driver fatigue and distraction with precision and reliability. By integrating seamlessly with existing vehicle systems, the DMS has the potential to become a cornerstone of next-generation safety solutions, significantly reducing road accidents and saving lives. Future enhancements could include deeper integration with autonomous driving systems and personalized driver profiles, further elevating its impact on road safety.